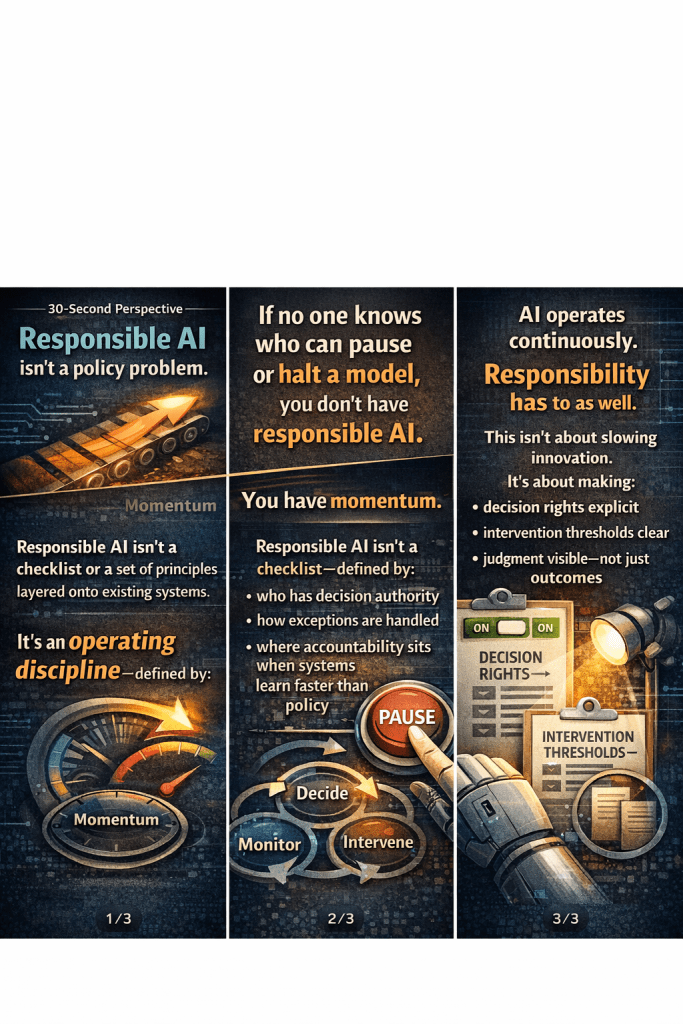

30-Second Perspective — Responsible AI as an Operating Discipline

Most organizations don’t fail at responsible AI because of bad intent. They fail because responsibility isn’t designed into how decisions actually get made.

Responsible AI isn’t a checklist or a set of principles layered onto existing systems. It’s an operating discipline, defined by who has authority, how exceptions are handled, and where accountability sits when systems learn faster than policies evolve.

If no one knows who can pause or halt a model, you don’t have responsible AI. You have momentum.

When responsibility lives only in policies, review boards, or periodic oversight, it can’t keep up with real-time systems.

AI operates continuously. Responsibility has to as well.

This isn’t about slowing innovation. It’s about making decision rights explicit, intervention thresholds clear, and judgment visible, not just outcomes.
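For teams that want to see what "explicit" could look like in practice, here is a minimal sketch. It is an illustration under stated assumptions, not a prescribed implementation: the `InterventionPolicy` class, the role names, and the error-rate threshold are all hypothetical. It shows the three ideas together: who holds halt authority, when intervention is triggered, and a log that records the reasoning alongside the outcome.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class InterventionPolicy:
    """Hypothetical policy: who may halt a model, and when a halt is triggered."""
    model_name: str
    halt_authority: list[str]   # roles with explicit authority to pause the model
    max_error_rate: float       # intervention threshold (assumed metric)
    decision_log: list[dict] = field(default_factory=list)

    def review(self, error_rate: float, role: str, rationale: str) -> str:
        """Decide on an action and record the judgment behind it."""
        if error_rate <= self.max_error_rate:
            action = "continue"
        elif role in self.halt_authority:
            action = "halt"       # reviewer holds the decision right
        else:
            action = "escalate"   # threshold breached, but no authority to halt
        # Log the reasoning and the reviewer, not just the outcome.
        self.decision_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "model": self.model_name,
            "role": role,
            "error_rate": error_rate,
            "action": action,
            "rationale": rationale,
        })
        return action


# Illustrative values only.
policy = InterventionPolicy(
    model_name="credit-scoring",
    halt_authority=["risk_officer"],
    max_error_rate=0.05,
)
```

A call such as `policy.review(0.09, "analyst", "drift after data refresh")` would return an escalation rather than a halt, because the analyst role has no halt authority in this sketch. The point is that the answer to "who can stop this?" is written down before it is needed.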

Responsible AI isn’t a philosophical stance. It’s enforced through operating design.

Leadership question: Where in your operating model is responsibility explicitly enforced—and where are you relying on it to show up when it matters?
