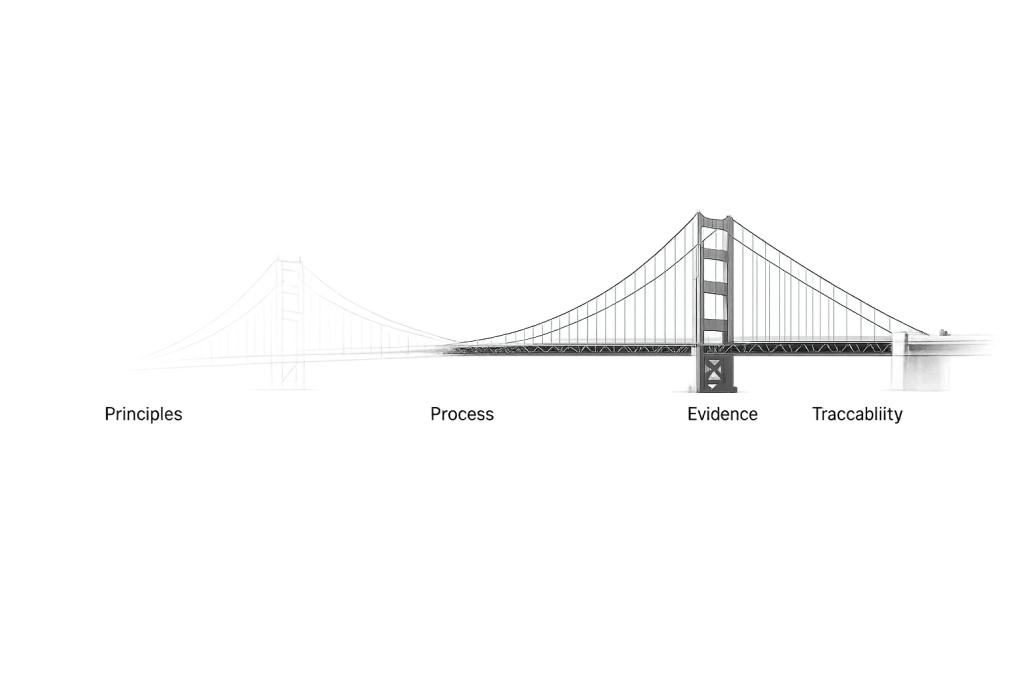

30-Second Perspectives | From Principles to Proof

For the last few years, organizations have invested heavily in AI principles. Ethics statements. Review boards. Responsible AI commitments.

That work mattered. It still does.

But as AI moves from experimentation into daily operations, leadership expectations are changing. The question is no longer whether we believe in responsible AI. It’s whether we can demonstrate it.

This is the quiet shift from intent to evidence.

Governance becomes real only when responsibility is traceable: when decisions leave a trail, when escalation paths are clear, and when there is a defined ability to intervene if a system drifts.

Not because regulators demand it.

Because credibility eventually does.

When outcomes matter, explanations matter. And when explanations matter, documentation stops being bureaucracy and starts being leadership infrastructure.

In this phase of AI adoption, trust isn’t built by what organizations declare. It’s built by what they can show.

Leadership question:

If responsibility were tested tomorrow, not just discussed, could your organization demonstrate how its AI systems are governed, or only explain why it believes they should be?
