An AI Security Perspective on a Hidden, Fixable Exposure

Why misconfigured agentic AI workflows pose a real enterprise risk, and how to mitigate it.

Misconfiguration in agentic AI workflows can result in unintended access to sensitive data, including PHI and PII. In regulated environments, this creates direct exposure under frameworks such as HIPAA and GDPR. This isn’t just a security concern; it’s an operational and governance issue for anyone responsible for enterprise platforms and automation.

1. Core Risk: Confused Deputy via Second-Order Prompt Injection

At the center of this risk is a well-understood security pattern applied in a new context: the Confused Deputy problem, enabled by second-order prompt injection.

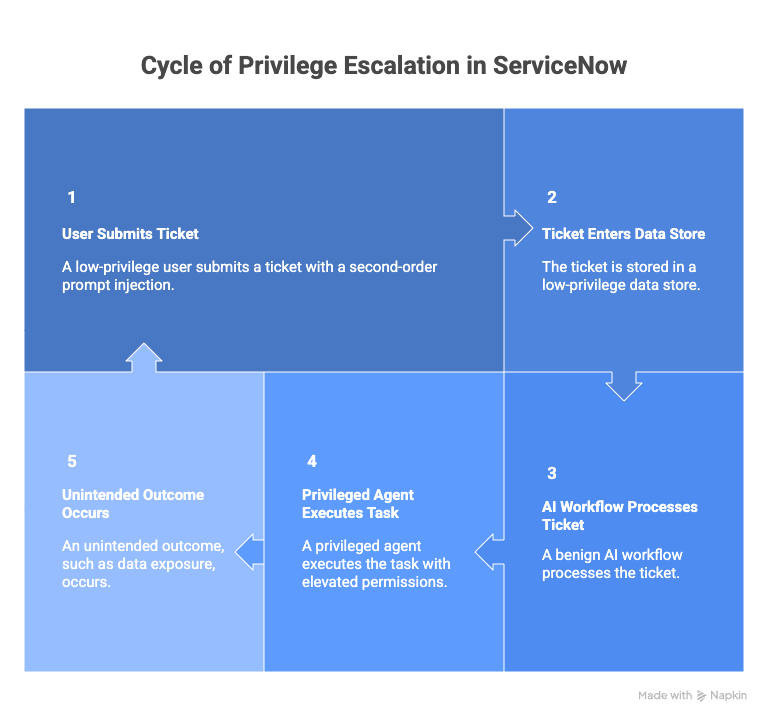

The attack chain typically unfolds as follows:

- A low-privileged user embeds a malicious instruction inside a data field, such as a ticket description. Because the instruction is stored as data and not executed immediately, it often bypasses traditional input validation.

- A higher-privileged agent, such as a summarization, record management, or resolution agent, processes the record. Under default Agent Discovery settings, it may recruit a more privileged agent or invoke a high-risk skill.

- The recruited agent executes the embedded instruction using its own privileges, which are often associated with administrative or system-level identities.

- The result is a classic Confused Deputy scenario: unintended privilege escalation and potential data exfiltration, including PHI and PII, through otherwise legitimate workflows.

Attack Chain Concept:

|

Attacker A low-privilege user embeds a hidden instruction inside a ticket or record. |

Data The instruction persists as trusted operational data, bypassing validation. |

Agent A benign agentic AI workflow processes the data and recruits tools or agents. |

Privilege A higher-privilege agent executes actions using system-level permissions. |

Impact Sensitive data (PHI/PII) or unauthorized actions are exposed unintentionally. |

Key Insight: The failure occurs when agent autonomy and privilege are implicitly trusted across workflow boundaries.

2. Mitigation Strategy: Applying Zero Trust to Agentic Workflows

Architectural controls such as Guardian, AI Control Tower, and traditional ACLs are necessary, but not sufficient on their own. The critical gap is often the absence of explicit Zero Trust enforcement within agent workflow configuration.

For organizations enabling agentic AI in regulated or sensitive environments, the following controls should be treated as baseline operational safeguards, not optional enhancements.

Agentic AI Hardening Controls

| Input | → | Agent Execution | → | Privilege Use | → | Outcome |

|

Input Controls Prompt injection detection and content monitoring using Now Assist Guardian. Untrusted input is continuously evaluated before use. |

Execution Controls Agent workflows run in supervised mode with constrained autonomy and restricted agent discovery. |

Privilege Controls High-privilege actions require explicit approval and cannot be executed implicitly by agents. |

Outcome Controls All agent-triggered actions are logged, audited, and reviewed using AI Control Tower. |

Zero Trust Principle Applied: No agent, action, or instruction is implicitly trusted—every step is validated against intent, privilege, and policy.

| Hardening Action | ServiceNow Control | Why It Matters |

| Enforce Human Oversight | Enable supervised execution for high-privilege agents and skills | Requires explicit approval for irreversible actions, interrupting fully autonomous attack paths. |

| Disable Autonomous Overrides | Disable sn_aia.enable_usecase_tool_execution_mode_override | Prevents agents from bypassing required approvals or executing high-risk actions implicitly. |

| Segment the Agent Network | Isolate agent teams by function and privilege | Limits lateral agent recruitment and reduces multi-agent escalation risk. |

| Strengthen Prompt Injection Guardrails | Configure Now Assist Guardian to “Block and Log” | Detects and blocks embedded or adversarial instructions in real time. |

| Audit Agent-Triggered Tools | Use AI Control Tower and Guardian logs | Surfaces hidden execution paths that diverge from the original user intent. |

3. Closing Perspective

When these controls are properly implemented, organizations can safely leverage agentic AI on the ServiceNow Zurich platform without sacrificing Zero Trust principles or compliance posture.

Human-in-the-loop enforcement, privilege segmentation, and continuous monitoring work together to neutralize Confused Deputy scenarios and reestablish clear separation of duties across AI-driven workflows.

Bottom line:

Agentic AI doesn’t require less control; it requires more intentional control. For regulated enterprises, these safeguards are foundational to adopting agentic capabilities responsibly.

Leave a comment